Automated scoring options in evaluation forms

Prerequisites

- Genesys Cloud CX 3, Genesys Cloud CX 4, Genesys Cloud CX 2 WEM Add-on I, Genesys Cloud CX 1 WEM Add-on II or Genesys Cloud EX license + AI Tokens

- Quality administrator permissions

- Quality > Evaluation Form > Edit AI scoring

- Optionally, to see the reasoning that the AI uses to select an answer, set the Quality > Evaluation > View Sensitive Data permission.

Genesys offers automated scoring capabilities for evaluation questions, enabling supervisors to spend more time coaching and less time on manual interaction reviews. These capabilities streamline quality management processes and help agents continuously improve. By transforming conversation data into actionable insights, both Genesys AI Scoring and Evaluation Assistance deliver measurable improvements in service quality and operational efficiency. Both solutions support voice and digital interaction types.

AI Scoring Overview

Genesys AI Scoring is an AI-driven capability that automates the evaluation of customer interactions. Rather than depending solely on manual assessments, it uses AI models trained against predefined quality criteria to score conversations at scale.

This results in faster, more consistent, and more objective evaluations, eliminating human bias and ensuring that a larger, more representative set of interactions is reviewed. In addition to automated scoring, AI Scoring provides detailed answer explanations that supervisors can use as targeted coaching feedback to enhance agent performance.

Evaluation Assistance Overview

Evaluation Assistance leverages topics to automate answers to specific evaluation form questions. Topics consist of phrases that represent business intents. For example, to detect interactions where a customer wants to cancel a service, you could create a topic named Cancellation and include phrases such as “close out my account” or “I want to cancel.”

These topics improve recognition of organization-specific language by tuning the underlying language models to look for the terms and phrases that matter most to your business.

When an evaluation assistance condition is added to a form question, speech and text analytics identify interactions that contain the associated topics. If a matching topic is found, the system automatically generates the answer to that question.

For more information, see Understand programs, topics, and phrases and Work with a topic.

- Create a question group. For more information, see Create and publish an evaluation form.

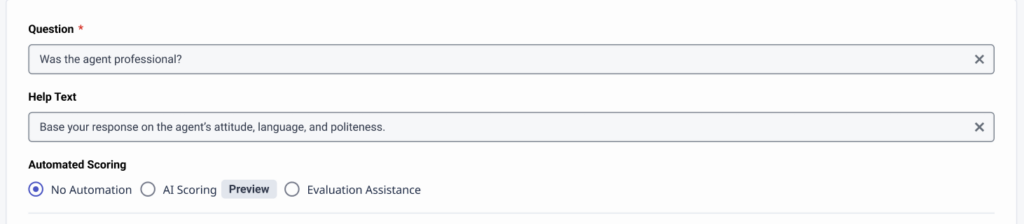

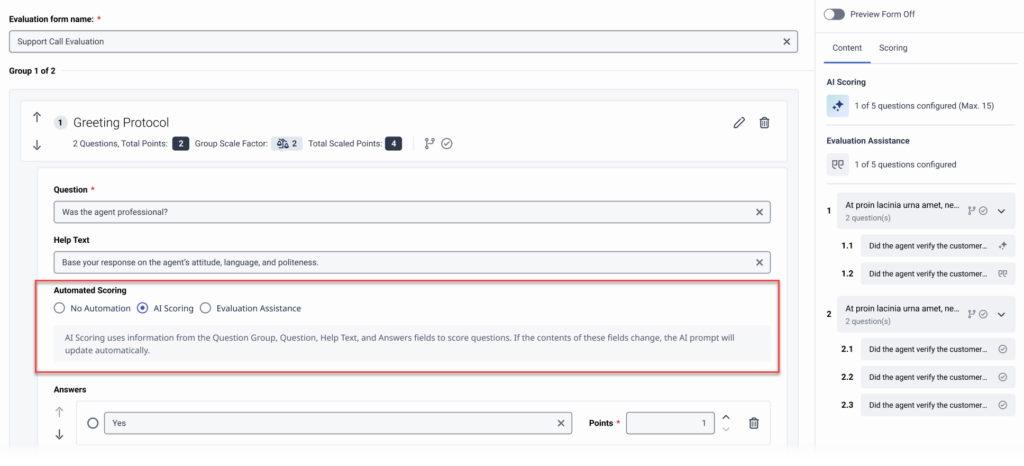

- When you add a new question, you can set automated scoring for each question.

The options are:

- No Automation

- AI Scoring

- Evaluation Assistance

- Save the question and finish the evaluation form setup. For more information, see Create and publish an evaluation form.

You can enable AI scoring per question within the evaluation form editor to have Genesys Cloud use AI to prefill the answer on an evaluation.

Create question prompts

The prompt is constructed automatically from the text of the question group, the question, the answers, and the help text. If you rephrase unclear questions and add context to these fields, you can improve the effectiveness of your questions for AI scoring.

Example unclear question 1: “Was the customer satisfied?”

- Clear Question for AI Scoring: “Did the agent resolve the customer’s issue to their satisfaction by the end of the conversation?”

- Help Text: “Focus on whether the agent addressed the customer’s main concerns and if the customer expressed positive feedback or indicated satisfaction at the end.”

Example unclear question 2: “Did the agent greet the customer properly?”

- Clear Question for AI Scoring: “Did the agent greet the customer with a friendly tone and mention their name at the start of the call?”

- Help Text: “Check if the agent greeted in a warm, polite manner and used the customer’s name within the first 30 seconds of the call, setting a friendly tone.”
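The following Python sketch illustrates why each of these fields matters: all four end up in the text that the AI model reads. It is not the Genesys Cloud implementation. The `Question` class, the `build_prompt` function, the output layout, and the "Opening" question group name are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Question:
    group: str           # question group text
    text: str            # question text
    answers: List[str]   # answer option labels
    help_text: str = ""  # optional help text


def build_prompt(q: Question) -> str:
    """Join the four form fields into one text block (assumed layout)."""
    lines = [
        f"Question group: {q.group}",
        f"Question: {q.text}",
        "Answers: " + " | ".join(q.answers),
    ]
    if q.help_text:
        lines.append(f"Help text: {q.help_text}")
    return "\n".join(lines)


greeting = Question(
    group="Opening",  # hypothetical question group name
    text=("Did the agent greet the customer with a friendly tone "
          "and mention their name at the start of the call?"),
    answers=["Yes", "No"],
    help_text=("Check if the agent greeted in a warm, polite manner and used "
               "the customer's name within the first 30 seconds of the call."),
)
prompt = build_prompt(greeting)
print(prompt)
```

Because every field contributes to the prompt, vague wording in any one of them (for example, a question group named "Misc") gives the model less context to work with.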

Prompt filters

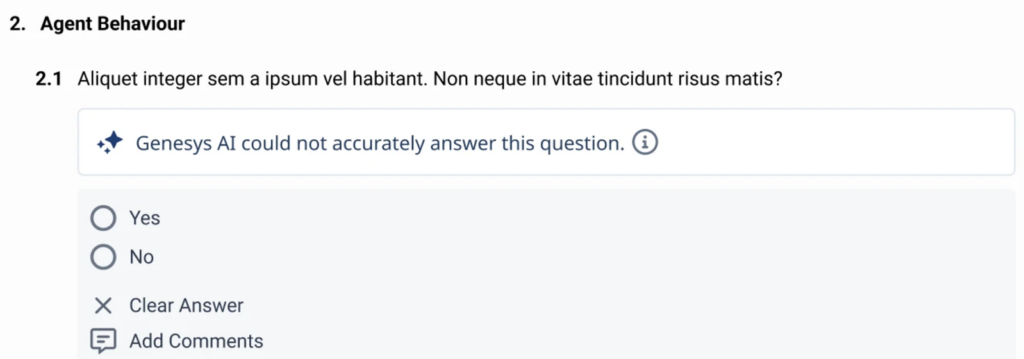

Questions configured for AI scoring use a prompt filter, so Genesys Cloud provides an answer only when the AI model’s answer has a high confidence level.

- Answer limit: You cannot save an AI scoring configuration for questions that have more than three answer options.

- N/A or no evidence: In this case, the AI model does not have sufficient information to answer a question. Genesys Cloud displays the context when the AI model cannot provide an answer.

- Historical Accuracy: Genesys Cloud continually reviews stored data from AI-generated scoring answers to assess their reliability. Accuracy is measured by how frequently a human evaluator modifies an AI-provided answer, and it is calculated from the most recent 25 submissions for each question. When accuracy meets the threshold, Genesys Cloud automatically displays the AI-generated answer for future evaluations that include the same question. If accuracy within this rolling window drops below 75%, the question is flagged with a low-confidence warning and the AI-generated answer is hidden from evaluators. Genesys Cloud continues to generate and store AI answers in the background, so accuracy is reassessed as new evaluations come in. When accuracy over the same 25-submission window returns to at least 75%, the low-confidence flag is removed and the AI-generated answer becomes visible to evaluators again.

- If you change and republish a form, Genesys Cloud resets the tracked history of questions with configured AI scoring.

- Genesys Cloud tracks the history for a particular question for up to 30 days. If there is no activity for the question after 30 days, the history is deleted.

- For more information about AI Scoring guidelines and best practices, see AI scoring best practices.

- For more information about how to create Agent auto complete evaluation forms, see Agent auto complete form type.
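The answer limit and the historical accuracy rule described in this section can be sketched in Python as follows. This is an illustrative model only, not Genesys Cloud code: the class and function names are hypothetical, and the sketch assumes that accuracy is computed over whatever submissions exist before the 25-submission window fills, which the documentation does not specify.

```python
from collections import deque
from typing import List, Optional

WINDOW = 25        # most recent submissions considered per question
THRESHOLD = 0.75   # minimum accuracy for the AI answer to be shown
MAX_ANSWERS = 3    # AI scoring cannot be saved with more answer options


def can_save_ai_scoring(answer_options: List[str]) -> bool:
    """Answer limit: at most three answer options."""
    return len(answer_options) <= MAX_ANSWERS


class QuestionHistory:
    """Rolling record of whether evaluators kept the AI answer (hypothetical)."""

    def __init__(self) -> None:
        # deque(maxlen=...) drops the oldest entry once the window is full.
        self._kept = deque(maxlen=WINDOW)

    def record(self, evaluator_changed_answer: bool) -> None:
        self._kept.append(not evaluator_changed_answer)

    def reset(self) -> None:
        """Models the history reset when a form is changed and republished."""
        self._kept.clear()

    def accuracy(self) -> Optional[float]:
        if not self._kept:
            return None  # no submissions yet
        return sum(self._kept) / len(self._kept)

    def is_low_confidence(self) -> bool:
        acc = self.accuracy()
        return acc is not None and acc < THRESHOLD


history = QuestionHistory()
for _ in range(7):
    history.record(evaluator_changed_answer=True)
for _ in range(18):
    history.record(evaluator_changed_answer=False)
print(history.is_low_confidence())  # True: 18 of 25 kept is 72%

history.record(evaluator_changed_answer=False)
print(history.is_low_confidence())  # False: 19 of 25 kept is 76%
```

In the example, a single additional unmodified answer pushes the oldest modified answer out of the window, which is enough to lift accuracy back above 75% and clear the low-confidence state.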

Add an evaluation assistance condition to a form question

- Create an evaluation form question.

- Click the Add Evaluation Assistance Condition option that is associated with the answer that you want Genesys Cloud to generate automatically.

- From the select if conversation list, select whether you want the conversation to include or exclude one or more topics.

- From the these topics list, select one or more topics.

- (Optional) Click the Add Evaluation Assistance Condition option again to add another condition to the same answer.
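The following Python sketch models how the conditions configured in these steps could select an answer. It is a simplified illustration, not the Genesys Cloud implementation. All names are hypothetical, and two behaviors are assumptions that this article does not confirm: an include condition matches when any of its topics is detected, and an answer is selected only when all of its conditions match.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Condition:
    mode: str          # "include" or "exclude"
    topics: Set[str]   # topic names selected for the condition

    def matches(self, detected: Set[str]) -> bool:
        # Assumption: "include" means at least one listed topic was detected.
        found = bool(self.topics & detected)
        return found if self.mode == "include" else not found


@dataclass
class AnswerRule:
    answer: str
    conditions: List[Condition]


def auto_answer(rules: List[AnswerRule], detected: Set[str]) -> Optional[str]:
    """Return the first answer whose conditions all match, else None."""
    for rule in rules:
        # Assumption: multiple conditions on one answer must all hold.
        if rule.conditions and all(c.matches(detected) for c in rule.conditions):
            return rule.answer
    return None


rules = [
    AnswerRule("Yes", [Condition("include", {"Cancellation"})]),
    AnswerRule("No", [Condition("exclude", {"Cancellation"})]),
]
print(auto_answer(rules, {"Cancellation", "Billing"}))  # Yes
print(auto_answer(rules, {"Billing"}))                  # No
```

Here `detected` stands in for the topics that speech and text analytics found in the conversation. Answers without any condition are never selected automatically in this sketch.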