How the AI model scores agents for predictive routing

Genesys predictive routing uses artificial intelligence (AI) to route interactions to the best agent. The agent score derived by predictive routing is based on a diverse set of data points including skill level of agents, a customer’s contact history, and an agent’s performance at a certain time of day. You can better interpret this data and how it impacts routing decisions by understanding the concept of explainability.

What is explainability and why is it important?

Explainability is the ability to explain the rationale behind AI-driven decisions to non-technical users. Explainability helps organizations fulfill moral and legal obligations for the data that they process. It also helps alleviate fears that data is being misused or that bias is being introduced. It does this by providing transparency on the data, or features, that the AI model uses and on the importance of those features.

What does feature importance mean in the decision-making process?

Feature importance is a score for input features based on how useful they are in predicting the target variable. While feature importance indicates which data has the most influence on decisions, it does not necessarily indicate that the data caused the decision. For example, suppose you have two input features for predicting the temperature on any given day: the month of the year and the previous day’s temperature. If you have access to both inputs, the prediction for today’s temperature might be accurate to within +/- 1 degree. If the only input you had was the previous day’s temperature, the prediction might be within +/- 2 degrees. However, if the only input you used was the month, you might only be able to predict the temperature within a range of +/- 4 degrees.

Therefore, the importance of the previous day’s temperature as an input feature is higher than the importance of the month, because in isolation it provides a better prediction than the month does.
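The temperature example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the temperatures are synthetic (a seasonal curve plus a made-up 30-day weather pattern), each "model" is a deliberately naive predictor, and importance is approximated by comparing the error each input achieves on its own. It is not how predictive routing calculates feature importance.

```python
import math
from statistics import mean

# Assumption: one synthetic, non-leap year of daily temperatures.
DAYS_IN_MONTH = [31, 28, 31, 30, 31, 30, 31, 31, 30, 31, 30, 31]


def synthetic_year():
    """Return a list of (month_index, temperature) pairs, one per day."""
    days = []
    day_of_year = 0
    for month, length in enumerate(DAYS_IN_MONTH):
        for _ in range(length):
            # Made-up seasonal curve plus a slower-moving 30-day weather pattern.
            seasonal = 15 + 10 * math.sin(2 * math.pi * (day_of_year - 100) / 365)
            pattern = 6 * math.sin(2 * math.pi * day_of_year / 30)
            days.append((month, seasonal + pattern))
            day_of_year += 1
    return days


def mae_month_only(days):
    """Predict each day's temperature as the average for its month."""
    by_month = {}
    for month, temp in days:
        by_month.setdefault(month, []).append(temp)
    month_avg = {m: mean(temps) for m, temps in by_month.items()}
    # Skip day 0 so both predictors are scored on the same days.
    return mean(abs(temp - month_avg[month]) for month, temp in days[1:])


def mae_previous_day_only(days):
    """Predict each day's temperature as the previous day's temperature."""
    return mean(abs(days[i][1] - days[i - 1][1]) for i in range(1, len(days)))


days = synthetic_year()
errors = {
    "month": mae_month_only(days),
    "previous_day_temperature": mae_previous_day_only(days),
}

# In this simplified view, a lower error in isolation means higher importance.
ranking = sorted(errors, key=errors.get)
for name in ranking:
    print(f"{name}: mean absolute error = {errors[name]:.2f}")
```

On this synthetic data, the previous day’s temperature ranks first because it produces the smaller error when used alone, which mirrors the reasoning in the example above.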

Does correlation imply causation?

Correlation does not imply causation. While the value of certain features may help predict an outcome, it cannot be assumed that they caused that outcome. Ice cream sales and incidences of sunburn are highly correlated; when ice cream sales are high, we can predict a high incidence of sunburn. It does not follow, however, that ice cream causes people to get sunburned, or that banning ice cream eradicates sunburn. Hence, we must not assume that changing the value of an important feature will impact other metrics.
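The ice cream example can be sketched as follows. The sketch assumes a hypothetical common cause, daily hours of sunshine, that drives both ice cream sales and sunburn cases; the numbers and the formulas are invented purely for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Assumption: hours of sunshine on twelve sample days (made-up values).
sunshine_hours = [2, 3, 5, 6, 8, 9, 11, 12, 10, 7, 4, 2]


def ice_cream_sales(day, sun, banned=False):
    # Hypothetical: sales depend on sunshine plus a small day-to-day variation.
    return 0 if banned else 20 + 5 * sun + (day % 3)


def sunburn_cases(day, sun):
    # Hypothetical: sunburn depends on sunshine only, never on ice cream.
    return 2 + 1.5 * sun + (day % 2)


sales = [ice_cream_sales(d, s) for d, s in enumerate(sunshine_hours)]
sunburn = [sunburn_cases(d, s) for d, s in enumerate(sunshine_hours)]
correlation = pearson(sales, sunburn)
print(f"correlation between sales and sunburn: {correlation:.3f}")

# "Ban" ice cream: sales drop to zero, but sunburn cases do not change.
sales_after_ban = [ice_cream_sales(d, s, banned=True) for d, s in enumerate(sunshine_hours)]
sunburn_after_ban = [sunburn_cases(d, s) for d, s in enumerate(sunshine_hours)]
print("sunburn unchanged after ban:", sunburn_after_ban == sunburn)
```

Sales are a useful predictor of sunburn in this data, yet forcing sales to zero leaves sunburn untouched, because the assumed driver of both is sunshine.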

Why explainability in predictive routing?

Predictive routing uses machine learning models to score the agents who might handle an interaction most effectively. To do the scoring, the model uses features created from various internal sources including agent profile data, aggregated customer data, and historic interaction data.

With explainability, predictive routing attempts to unpack the factors that influenced the agent scoring. This helps stakeholders such as administrators, supervisors, business owners, data scientists, and solution consultants understand the data that is being used in AI-assisted decisions.

For example, suppose that you see interactions being regularly routed to a specific pool of agents and you want to investigate why. Assume that the KPI you set for your queue is Average Handle Time, and you observe that, for the predictive model on that queue, one of the most important features is after call work (ACW) time. From the explainability features, you can understand that the agents who spend the least time on ACW are predicted to have the lowest handle time and are therefore ranked high.
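The following toy sketch shows why low ACW time can translate into a high rank when the KPI is Average Handle Time. The agent names, the numbers, and the simplified formula (handle time as talk time plus ACW time, with hold time omitted) are assumptions made for illustration. The actual predictive model is a trained machine learning model and does not score agents with a fixed formula like this one.

```python
from dataclasses import dataclass


@dataclass
class AgentEstimate:
    name: str
    talk_seconds: float  # hypothetical predicted talk time
    acw_seconds: float   # hypothetical predicted after call work time

    @property
    def handle_seconds(self) -> float:
        # Simplification: handle time = talk time + ACW time (hold time omitted).
        return self.talk_seconds + self.acw_seconds


agents = [
    AgentEstimate("Agent A", talk_seconds=300, acw_seconds=90),
    AgentEstimate("Agent B", talk_seconds=310, acw_seconds=30),
    AgentEstimate("Agent C", talk_seconds=295, acw_seconds=60),
]

# Lowest predicted handle time ranks first.
ranked = sorted(agents, key=lambda agent: agent.handle_seconds)
for position, agent in enumerate(ranked, start=1):
    print(position, agent.name, agent.handle_seconds)
```

Talk times are nearly identical across the three hypothetical agents, so the ranking is decided almost entirely by ACW time.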

Where can I see explainability in Genesys Cloud?

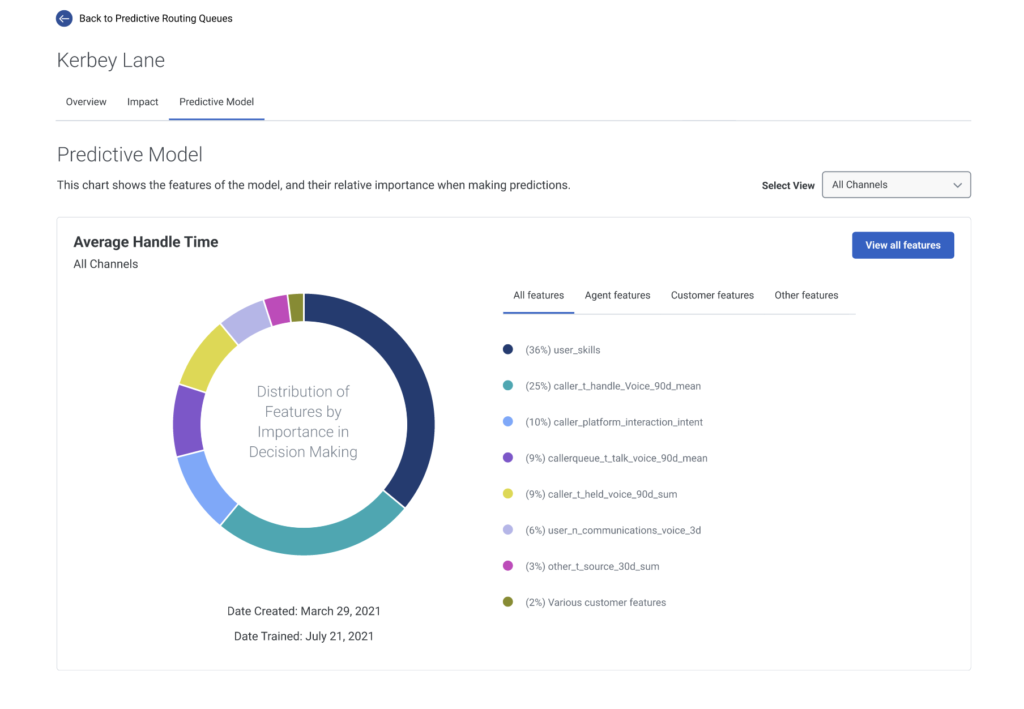

Each queue on which predictive routing is active has its own predictive model. For each model, explainability groups agent scoring features into three buckets: agent features, customer features, and other features. The features that influenced the agent scoring with respect to the KPI that you set are listed in descending order of importance.
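As a rough mental model of this layout, the sketch below groups a flat list of features into the three buckets and sorts each bucket by importance. The feature names and scores are hypothetical, and sorting within each bucket is an assumption about how the page presents the list.

```python
from collections import defaultdict

# (bucket, feature name, importance): all names and scores are hypothetical.
features = [
    ("agent", "acw_time", 0.31),
    ("customer", "previous_contact_count", 0.12),
    ("other", "hour_of_day", 0.08),
    ("agent", "tenure", 0.22),
    ("customer", "last_interaction_outcome", 0.05),
]


def group_by_bucket(rows):
    """Group features by bucket, most important feature first in each bucket."""
    grouped = defaultdict(list)
    for bucket, name, importance in rows:
        grouped[bucket].append((name, importance))
    for entries in grouped.values():
        entries.sort(key=lambda entry: entry[1], reverse=True)
    return dict(grouped)


buckets = group_by_bucket(features)
for bucket in ("agent", "customer", "other"):
    print(bucket, buckets.get(bucket, []))
```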

Besides the feature-related information, the Predictive Model page also provides information on the date the model was last trained. For more information about interpreting feature importance, see View features that influenced predictive routing decisions.

The Predictive Model page provides this feature-wise split across agent features, customer features, and other features.

How can AI decisions be changed based on the current explainability data?

Predictive routing trains its models on data that is readily available in the Genesys Cloud platform. While most of the data used in the models cannot be modified, there are some optional features that you can populate to improve predictions. For more information, see Data requirements for predictive routing.