Genesys Virtual Agent

What's the challenge?

Contact center volumes are growing at a rapid pace. Hiring agents to keep up with that volume is expensive and not fast enough. Contact centers are looking to provide agent-like experiences to consumers quickly and efficiently. As consumer preference and increasingly advanced self-service options move “easy” questions out of the contact center, agents are left with complex issues to solve for customers whose expectations are higher than ever before. This can lead to more interaction transfers. Virtual Agents are here to help! Using the power of Generative AI and Large Language Models (LLMs), they can hold a more natural, agent-like conversation and work through complex issues as they arise.

What's the solution?

Virtual Agent allows our customers to autonomously begin and complete conversations in real time. The VA uses LLMs to understand what the customer wants to accomplish, walk them through a task that follows a business process, or surface the appropriate knowledge articles to get the job done. Virtual Agents can be deployed on both voice and digital channels, and are built in a no-code editor within Genesys Architect. To get started more quickly, Virtual Agents can be trained from existing customer transcripts through the Intent Miner tool. After a VA completes a conversation, it uses Generative AI to perform the same after-call work a human agent would, such as writing a summary, tagging a wrap-up code, and providing next steps. AI-led self-service empowers companies to scale their customer interactions while improving the experience, which in turn improves first contact resolution rates and reduces transfers.

Use case overview

Story and business context

The rise of digital and voice channels has led to heightened customer expectations and a significant increase in the volume of interactions that companies must manage when servicing their customers. As organizations increasingly embrace Artificial Intelligence (AI), many are implementing virtual agents to engage with customers. Virtual Agents answer queries and automate various tasks across digital platforms such as websites, mobile apps, social media, SMS, and messaging applications.

Virtual agents play a crucial role in alleviating pressure on contact center employees while enhancing the overall customer experience and managing costs effectively. Virtual agents operate 24/7, providing immediate assistance by answering questions, performing tasks, and seamlessly handing over to a human agent whenever necessary.

Genesys’ innovative hybrid approach combines Dialog Engine Bot Flows with advanced Generative AI and LLM technology, delivering an exceptional experience for customers and agents. This hybrid approach provides the trust and transparency of a more traditional, flow-based experience, while the use of LLMs makes the conversation more flexible and dynamic.

For customer service agents, the benefits are equally impressive. They receive concise summaries of conversations handled by virtual agents, allowing them to quickly grasp the context and address customer needs with speed and accuracy. Similar LLM and Generative AI capabilities are found in Agent Copilot, which helps make agents more efficient.

End customers will experience a new level of service as our virtual agents respond in a natural, conversational manner. When they have questions, they won’t have to search through lengthy articles; instead, answers to their specific question are highlighted or generated. Whether interacting through digital channels or voice, our virtual agents understand customer needs and capture essential information to complete tasks seamlessly.

Moreover, when a virtual agent successfully concludes a conversation, it wraps up just like a human agent would: it writes a summary and assigns a wrap-up code that provides valuable insights into performance. This allows stakeholders to easily measure effectiveness and continuously improve customer service.

Use case benefits

| Benefit | Explanation |
|---|---|
| Improved Customer Experience | Virtual Agent can handle more complex business inquiries and resolve customer queries more quickly. |
| Improved First Contact Resolution | Reduce repeat callers through accurate resolution the first time. |
| Reduced Transfers | More accurately identify customer intent and route to the right queue. |
| Reduced Handle Time | Customer queries are resolved in less time because Virtual Agent uses LLMs to provide highly accurate information that resolves them as quickly as possible. |

Summary

Genesys Virtual Agents use LLMs to help customers find the information they are looking for and resolve their queries quickly and with high accuracy. Virtual Agents can understand the customer’s intent and provide quick, accurate responses. They can summarize an answer from a single article so that they address the customer’s question directly, rather than giving a verbose response that the customer must read. Virtual Agents provide more human-level contextual understanding than other bots, and they can hold complete conversations, store the conversation history, and provide automatic wrap-up codes.

Use case definition

Business flow

1. An interaction is initiated (reactive or proactive) across a supported channel.
2. The customer receives a standard welcome message from the VA.
3. Customer information and/or context is retrieved from:
    - Customer profile information in External Contacts
    - An API call to a third-party data source
4. The customer receives a personalized message or is handed over to an agent. Examples include:
    - Custom message or update: “Your next order is due to arrive on Thursday before 12.”
    - Personalized welcome message: “Hello Shane, welcome to Genesys Cloud. How can I help you?”
    - The customer is handed over directly to an agent because they owe an outstanding balance.
5. Assuming the customer has moved on from the personalization stage, the conversation continues with the VA, which asks an open-ended question such as “How may I help you?” to determine intent and capture the customer’s [BL1]
    - If intent and slots are returned, the conversation moves to the correct point in the interaction flow, for example:
        - “I see you want to book an appointment for Friday, what time?”
    - The VA continues to follow the steps in the task to complete the interaction by:
        - Collecting additional information, using LLMs to understand a variety of responses
        - Displaying a knowledge article specific to the task at hand
        - Interacting with a back-end system via a Data Action
    - Display an article from the knowledge base and:
        - Highlight the relevant text in the article to answer the user’s question
        - Generate a personalized response using a RAG (Retrieval Augmented Generation) model
    - Hand off to a live agent
6. Upon completion of a task, the VA asks whether there are any follow-ups, with a question such as “Is there anything else I can help you with?”
    - If the customer responds “yes,” they return to step 5: “How may I help you?”
    - If the customer responds “no,” the conversation returns to the interaction flow.
    - If the customer responds with a more complex answer, the VA determines intent and entities for further processing.
7. Customer information and/or context is retrieved to determine whether to offer a survey. [BL2]
    - If a survey is offered, the interaction is sent to a chatbot.
    - If no survey is offered, the interaction flow shows a goodbye message and ends.
8. The survey is executed. The survey questions are configurable by the customer on a business-as-usual basis in the chatbot, so no dialog flow is defined here.
9. The interaction flow presents a goodbye message and ends the chat.
10. The VA writes a summary of what occurred, uses a Large Language Model to tag any wrap-up codes, and either transfers the conversation to an agent for further assistance or closes the interaction.
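The business flow above can be sketched as a small state machine. This is a minimal, hypothetical Python illustration, not how Genesys Architect represents flows: the `Step` names and event strings such as `"intent_found"` are invented for this example, and each state only loosely corresponds to a numbered step.

```python
from enum import Enum, auto


class Step(Enum):
    """Hypothetical states that loosely follow the numbered business-flow steps."""
    WELCOME = auto()        # steps 1-3: interaction starts, context is retrieved
    PERSONALIZE = auto()    # step 4
    ASK_INTENT = auto()     # step 5: "How may I help you?"
    RUN_TASK = auto()       # step 5: task steps, articles, Data Actions
    ASK_FOLLOW_UP = auto()  # step 6
    SURVEY_CHECK = auto()   # step 7
    SURVEY = auto()         # step 8
    GOODBYE = auto()        # step 9
    AGENT_HANDOFF = auto()  # BL1: terminal, the summary goes to the agent
    WRAP_UP = auto()        # step 10: terminal, VA writes summary and wrap-up code


TERMINAL = {Step.AGENT_HANDOFF, Step.WRAP_UP}

# (current step, event) -> next step; event names are illustrative only.
TRANSITIONS = {
    (Step.WELCOME, "context_loaded"): Step.PERSONALIZE,
    (Step.PERSONALIZE, "continue"): Step.ASK_INTENT,
    (Step.PERSONALIZE, "balance_owed"): Step.AGENT_HANDOFF,
    (Step.ASK_INTENT, "intent_found"): Step.RUN_TASK,
    (Step.RUN_TASK, "task_done"): Step.ASK_FOLLOW_UP,
    (Step.ASK_FOLLOW_UP, "yes"): Step.ASK_INTENT,
    (Step.ASK_FOLLOW_UP, "intent_found"): Step.RUN_TASK,  # more complex follow-up answer
    (Step.ASK_FOLLOW_UP, "no"): Step.SURVEY_CHECK,
    (Step.SURVEY_CHECK, "offer_survey"): Step.SURVEY,
    (Step.SURVEY_CHECK, "no_survey"): Step.GOODBYE,
    (Step.SURVEY, "survey_done"): Step.GOODBYE,
    (Step.GOODBYE, "ended"): Step.WRAP_UP,
}


def next_step(step: Step, event: str) -> Step:
    """Return the step that follows `step` when `event` occurs."""
    if step in TERMINAL:
        raise ValueError(f"{step.name} is terminal")
    if event == "agent_requested":
        return Step.AGENT_HANDOFF  # BL1: the customer can ask for an agent at any time
    try:
        return TRANSITIONS[(step, event)]
    except KeyError:
        raise ValueError(f"no transition from {step.name} on {event!r}") from None


def run(events: list) -> list:
    """Replay a sequence of events from the start and return the visited steps."""
    step = Step.WELCOME
    path = [step]
    for event in events:
        step = next_step(step, event)
        path.append(step)
    return path
```

Keeping the transitions in one explicit table makes an unsupported path fail loudly instead of silently stalling the conversation.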

Business and distribution logic

Business Logic

NLU:

- Intents: The goal of the interaction. For example, a “book flight” intent returned by the NLU will direct a user to the related task and walk them through the process.

- Slots: Additional pieces of key information required to complete a task or answer a question. For example, “Book a flight to Paris” fills the “Destination” slot with the value “Paris.” Slots can be filled in the initial request or later in the flow when needed.
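To make the intent and slot terminology concrete, here is a minimal Python sketch of slot filling. It assumes a hypothetical `Intent` definition and that an NLU step has already extracted slot values from each user turn; it is not the Dialog Engine data model, and only shows how a flow can keep asking for whichever required slot is still missing.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Intent:
    """Hypothetical intent definition: a name plus the slots its task needs."""
    name: str
    required_slots: List[str]
    prompts: Dict[str, str]  # question to ask when a slot is still empty


@dataclass
class DialogState:
    """Tracks which slots have been filled for the active intent."""
    intent: Intent
    slots: Dict[str, str] = field(default_factory=dict)

    def fill(self, extracted: Dict[str, str]) -> None:
        """Merge slot values extracted from the latest user turn, ignoring unknown slots."""
        for name, value in extracted.items():
            if name in self.intent.required_slots:
                self.slots[name] = value

    def next_prompt(self) -> Optional[str]:
        """Return the prompt for the first missing slot, or None when the task can run."""
        for name in self.intent.required_slots:
            if name not in self.slots:
                return self.intent.prompts[name]
        return None


book_flight = Intent(
    name="book_flight",
    required_slots=["destination", "date"],
    prompts={
        "destination": "Where would you like to fly to?",
        "date": "What day would you like to travel?",
    },
)
state = DialogState(book_flight)
state.fill({"destination": "Paris"})  # extracted from "Book a flight to Paris"
print(state.next_prompt())  # What day would you like to travel?
```

Because the destination arrives with the initial request, the VA only needs to ask for the travel date, mirroring how slots can be filled up front or later in the flow.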

LLM:

- Large Language Model: Instead of being trained on example phrases, the LLM is simply given a description of what needs to be done. For example, a “book flight” intent would have a description such as “a user is requesting to book a flight with the airline,” and however a user phrases the request, the LLM can identify which intent they are requesting.

- LLMs are also used to extract slots. Again, you simply give a description, such as “The city the user wants to fly to,” and the LLM uses its pre-existing knowledge of the world to identify any city the user might request.

- Retrieval Augmented Generation (RAG): Virtual Agents can use RAG models to first Retrieve the correct article from the knowledge base, Augment the query to the LLM with that article, and Generate a personalized response to the user’s question. RAG grounds the LLM’s response in the business content provided in the article, for increased accuracy and domain knowledge, while still maintaining the highly conversational nature of an LLM.
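The description-based approach and the three RAG stages can be sketched in Python as plain prompt assembly. This is an illustrative sketch, not the Genesys implementation: the prompt wording, the word-overlap scoring in `retrieve` (a toy stand-in for real semantic search), and the sample articles are all assumptions, and the final Generate step is left to whichever LLM client you use.

```python
import re
from typing import Dict, Set


def build_intent_prompt(intent_descriptions: Dict[str, str], utterance: str) -> str:
    """Describe each intent in plain language and ask the LLM to pick one."""
    lines = ["Classify the user's message into one of these intents:"]
    for name, description in intent_descriptions.items():
        lines.append(f"- {name}: {description}")
    lines.append("Reply with the intent name only, or 'none' if nothing fits.")
    lines.append(f"User message: {utterance}")
    return "\n".join(lines)


def _tokens(text: str) -> Set[str]:
    """Lowercase word set used by the toy retriever."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(articles: Dict[str, str], question: str) -> str:
    """Retrieve: return the title of the article sharing the most words with the question."""
    question_tokens = _tokens(question)
    return max(
        articles,
        key=lambda title: len(question_tokens & _tokens(title + " " + articles[title])),
    )


def build_rag_prompt(article_text: str, question: str) -> str:
    """Augment: put the retrieved article in the prompt so the answer is grounded in it."""
    return (
        "Answer the question using only the article below. "
        "If the article does not contain the answer, say that you don't know.\n\n"
        f"Article:\n{article_text}\n\n"
        f"Question: {question}"
    )


articles = {
    "Baggage allowance": "Each passenger may check one bag of up to 23 kg.",
    "Changing a booking": "You can change your flight date online up to 24 hours before departure.",
}
question = "How do I change my flight date?"
title = retrieve(articles, question)  # "Changing a booking"
prompt = build_rag_prompt(articles[title], question)
# Generate: send `prompt` to an LLM of your choice (not shown here).
```

Instructing the model to answer only from the supplied article is what keeps the response tied to business content, while the LLM still phrases the answer conversationally.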

BL1: Agent Handoff: The customer can ask to be connected to an available agent. At that point, the Virtual Agent disconnects and the chat transcript (excluding sensitive data) appears in the agent desktop. The VA also sends a summary of the conversation so far.
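BL1 states that sensitive data is excluded from the transcript the agent sees. As a minimal sketch of that idea, the Python below masks card-like numbers and email addresses before building a hypothetical handoff payload. The regular expressions and the payload shape are assumptions for illustration only; they are not how Genesys Cloud identifies or redacts sensitive data.

```python
import re
from typing import Dict, List, Tuple

# Simplified, illustrative patterns only; real sensitive-data detection is broader.
_PATTERNS = [
    (re.compile(r"\b\d(?:[ -]?\d){12,15}\b"), "[CARD]"),      # 13-16 digit numbers
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[EMAIL]"),  # email addresses
]


def redact(text: str) -> str:
    """Replace each sensitive match with a placeholder."""
    for pattern, placeholder in _PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


def handoff_payload(transcript: List[Tuple[str, str]], summary: str) -> Dict[str, object]:
    """Hypothetical payload for the agent: redacted transcript plus the VA's summary."""
    return {
        "transcript": [(speaker, redact(message)) for speaker, message in transcript],
        "summary": summary,
    }
```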

BL2: Survey: The customer can decide whether or not to offer a survey. This decision can be based on:

- Customer profile information in External Contacts

- Customer journey data

- API call to third-party data source
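The BL2 decision can be expressed as a small rule over the three data sources listed above. The Python below is a hypothetical sketch: the field names and the rules themselves (skip customers who opted out or were surveyed recently, and survey only resolved journeys) are illustrative assumptions, not Genesys defaults.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SurveyContext:
    """Hypothetical inputs, one per data source listed for BL2."""
    surveyed_recently: bool           # e.g. read from the External Contacts profile
    journey_outcome: str              # e.g. "resolved" or "transferred", from journey data
    opted_out: Optional[bool] = None  # from a third-party API; None if the call failed


def should_offer_survey(ctx: SurveyContext) -> bool:
    """Return True when the interaction should be routed to the survey chatbot."""
    if ctx.opted_out:            # a confirmed opt-out always wins
        return False
    if ctx.surveyed_recently:    # avoid surveying the same customer too often
        return False
    return ctx.journey_outcome == "resolved"
```

Treating a failed API call (`None`) as "not opted out" is a design choice; a more conservative deployment might skip the survey in that case.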

User interface & reporting

Agent UI

The chat transcript between the customer and the Virtual Agent is populated in the chat interaction window in the agent desktop. The summary is surfaced in the interaction panel.

Reporting

Real Time Reporting

With Genesys Cloud, you can do flow reporting and use flow outcomes to report on VA and Bot Flow intents.

See the Flows Performance Summary view and use flow outcome statistics to help you identify performance issues for specific VA and Bot Flows and gather data about self-service success. Use this flow data to improve VA and Bot Flow outcomes. Note: Flow outcome statistics require the customer to implement flow outcomes.

Use the Flows Performance Detail view to see a breakdown of metrics by interval for a specific VA or Bot Flow, and to see how VA and Bot Flow interactions enter and leave a chat flow.

The Flow Outcomes Summary view displays statistics related to chats that enter Architect flows. These statistics can help you determine how well your VA and Bot Flows serve customers and gather data about self-service success.
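As a rough illustration of the kind of aggregation behind flow outcome statistics, the Python below computes a success percentage per flow and outcome. The record format is a hypothetical simplification invented for this example; it is not the Genesys Cloud analytics schema, and the views above compute these figures for you.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping, Tuple

Key = Tuple[str, str]  # (flow name, flow outcome name)


def outcome_success_rates(records: Iterable[Mapping[str, object]]) -> Dict[Key, float]:
    """Percentage of records per (flow, outcome) whose outcome ended in success."""
    totals: Dict[Key, int] = defaultdict(int)
    successes: Dict[Key, int] = defaultdict(int)
    for record in records:
        key = (str(record["flow"]), str(record["outcome"]))
        totals[key] += 1
        if record["success"]:
            successes[key] += 1
    return {key: 100.0 * successes[key] / totals[key] for key in totals}
```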

Historical Reporting

In the Knowledge Optimizer dashboard, you can analyze the effectiveness of your knowledge base. In this view, you can see the following metrics:

- All queries in a specific time frame and the breakdown, in percentages, of answered and unanswered queries.

- All answered queries in a specific time frame and the breakdown, in percentages, of the application from which the conversation originated.

- All unanswered queries in a specific time frame and the breakdown, in percentages, of the application from which the conversation originated.

- Top 20 articles and the frequency with which each article appeared in a conversation.

- Top 20 answered queries and the frequency with which each answered query appeared in a conversation.

- Top 20 unanswered queries and the frequency with which each unanswered query appeared in a conversation.
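To show how the metrics listed above relate to raw query data, here is a small Python sketch over a hypothetical query log. The `Query` fields are assumptions made for this example, not the Knowledge Optimizer data model, and article frequency is simplified to one appearance per answered query; the dashboard itself calculates these values.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Query:
    """Hypothetical log entry for one knowledge query."""
    text: str
    app: str                # application the conversation originated from
    article: Optional[str]  # article that answered the query, or None if unanswered


def answered_split(queries: List[Query]) -> Dict[str, float]:
    """Percentage of answered vs. unanswered queries."""
    if not queries:
        return {"answered": 0.0, "unanswered": 0.0}
    answered = sum(1 for q in queries if q.article is not None)
    total = len(queries)
    return {"answered": 100.0 * answered / total, "unanswered": 100.0 * (total - answered) / total}


def app_breakdown(queries: List[Query], answered: bool) -> Dict[str, float]:
    """Percentage of answered (or unanswered) queries per originating application."""
    subset = [q for q in queries if (q.article is not None) == answered]
    counts = Counter(q.app for q in subset)
    return {app: 100.0 * n / len(subset) for app, n in counts.items()}


def top_articles(queries: List[Query], n: int = 20) -> List[Tuple[str, int]]:
    """Most frequent articles, counting one appearance per answered query."""
    return Counter(q.article for q in queries if q.article is not None).most_common(n)


def top_queries(queries: List[Query], answered: bool, n: int = 20) -> List[Tuple[str, int]]:
    """Most frequent answered (or unanswered) query texts."""
    return Counter(q.text for q in queries if (q.article is not None) == answered).most_common(n)
```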

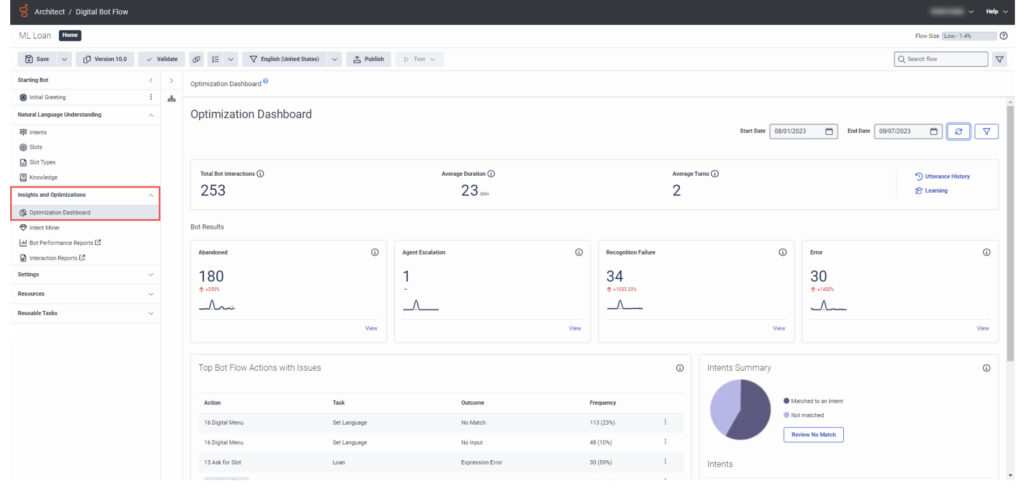

The Bot Optimizer dashboard can be used in Architect to view performance and high-level operational metrics for a selected Genesys Dialog Engine Bot Flow or Genesys Digital Bot Flow. This data helps you improve and troubleshoot your VA and Bot Flows. You can also filter these results by date range or configured language.

Customer-facing considerations

Interdependencies

General assumptions

Customers and/or Genesys Professional Services are responsible for managing the Virtual Agent NLU and rules engine, and for uploading their own knowledge base articles into Genesys Knowledge Workbench for use by the Virtual Agent.

Customer responsibilities

- The customer needs to provide a knowledge base (KB), or the articles that will make up the knowledge base.

- Flow Outcome Statistics capabilities require the customer to implement flow outcomes. They do not come out of the box.

Related documentation

Document version

V 1.0.0